Secure by design has never been a precise idea. The roots of the concept trace back to the 1970s, but it has recently surged back into prominence with CISA's secure by design guidance and pledge. Security teams, vendors, and regulators now repeat the phrase like a mantra, yet when you press for a definition you'll get a dozen different answers. Depending on who's speaking, it might mean threat modeling workshops, memory-safe languages, disclosure programs, bug bounties, SBOMs, SAST gates, or simply "shift left." All worthwhile practices, but lumped together under one broad banner, they have turned a specific idea into a catch-all slogan.

The concept still matters: security should be built in, not bolted on. Yet many of the practices labeled as secure by design are quite literally bolt-ons that come into play later in the SDLC, rather than true design commitments made upfront. To attain secure by design, we need to lead with design, not just work back from CVEs and vulnerabilities to define tactics.

In this article, we’ll give an overview of secure by design as it exists today, its challenges, and how we as an industry can reframe the principle to create a roadmap to successful adoption and implementation.

Where secure by design drifts from design

CISA's position in "Shifting the Balance of Cybersecurity Risk: Principles and Approaches for Secure by Design Software" is that vendors should take responsibility for customer security outcomes and ship products that are secure to use out of the box. Secure by default is the companion idea: strong protections should be enabled without extra cost or complex configuration. The message is blunt: the software industry does not need more security products; it needs more secure products.

The secure by design initiative sets the right tone and direction for accountability, transparency, and leadership, but most of its principles and tactics sit outside the actual design phase of software development. Executive sponsorship, metrics, SBOM sharing, and public reporting all shape how an organization behaves, but they do not tell software builders what to design. They are organizational and operational guardrails, not design and planning practices.

The real design layer is tangible. It includes design and architecture reviews, secure patterns and paved roads, threat modeling, and security frameworks and requirements, all addressed before a line of code is written. This is where security and design actually intersect.

Bringing order to the secure by design chaos

If secure by design is to be actionable, it needs to be specific and concrete. The term has grown so broad that it can cover leadership buy-in, threat modeling, and everything in between. There is a suite of related terms at our disposal to tighten it up: secure by design, secure by default, design security, and secure design. Unfortunately, they are often mixed up or misapplied, and at this point few people understand the differences between them.

Here’s a quick breakdown of the differences between them all:

- Secure by design is the philosophy. It’s the idea of systematically integrating security into every aspect of software development: architecture, requirements, development, deployment, and maintenance. It is the broadest and most comprehensive term here.

- Secure by default is a principle that says the product should ship with safe defaults, making the secure choice the easiest and the insecure choice the intentionally explicit one.

- Design security is the general practice of considering security during the design phase. It is broad and not tied to a methodology or philosophy.

- Secure design is the outcome: designs that already incorporate specific security controls to address the risks that were identified.
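
To make the secure by default bullet above more concrete, here is a minimal Python sketch. It assumes a hypothetical `ServiceConfig` with invented setting names (none of this comes from CISA's guidance or from any real product); the point is only that the secure value is what you get when you specify nothing, and that any insecure choice has to be spelled out and is easy to surface.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServiceConfig:
    """Hypothetical service settings; every default is the secure choice."""
    require_tls: bool = True
    require_auth: bool = True
    debug_endpoints: bool = False
    session_timeout_minutes: int = 15


# Settings whose deviation from the default weakens security (illustrative list).
SECURITY_SENSITIVE = ("require_tls", "require_auth", "debug_endpoints")


def insecure_overrides(config: ServiceConfig) -> list[str]:
    """Return the names of security-sensitive settings that differ from the defaults."""
    defaults = ServiceConfig()
    return [
        name
        for name in SECURITY_SENSITIVE
        if getattr(config, name) != getattr(defaults, name)
    ]


if __name__ == "__main__":
    shipped = ServiceConfig()                        # nothing specified: secure
    local_dev = ServiceConfig(debug_endpoints=True)  # insecure choice is explicit

    print(insecure_overrides(shipped))
    print(insecure_overrides(local_dev))
```

With this shape, a deployment check could refuse to start, or at least log loudly, whenever `insecure_overrides` returns a non-empty list, which keeps the insecure path intentional rather than accidental.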

As an industry we have spent years focused on the philosophy, the ideal of what secure by design should be. But philosophy does not make software safer. Without practices, measurable outcomes, and principles that guide design and security decisions, the concept stays abstract and aspirational. Making secure by design real means moving from intention to implementation, from stating what we believe to building what we can prove.

Design security is no small feat

Embedding security at the design stage sounds elegant but proves difficult in practice. True design security requires time, information discovery, domain-specific skill, collaboration, and a culture where engineering, product, and security teams are aligned on priorities.

Developers describe impossible tradeoffs between release velocity and secure architecture, management using “shift left” as a cost-saving slogan, and offshore teams working under time and skill constraints that make structured design reviews unrealistic. Others point to a skills gap: few developers are trained to spot subtle logic flaws or unsafe assumptions at design time, and the few product security experts who can are not always brought in early enough, or are overruled when delivery deadlines loom.

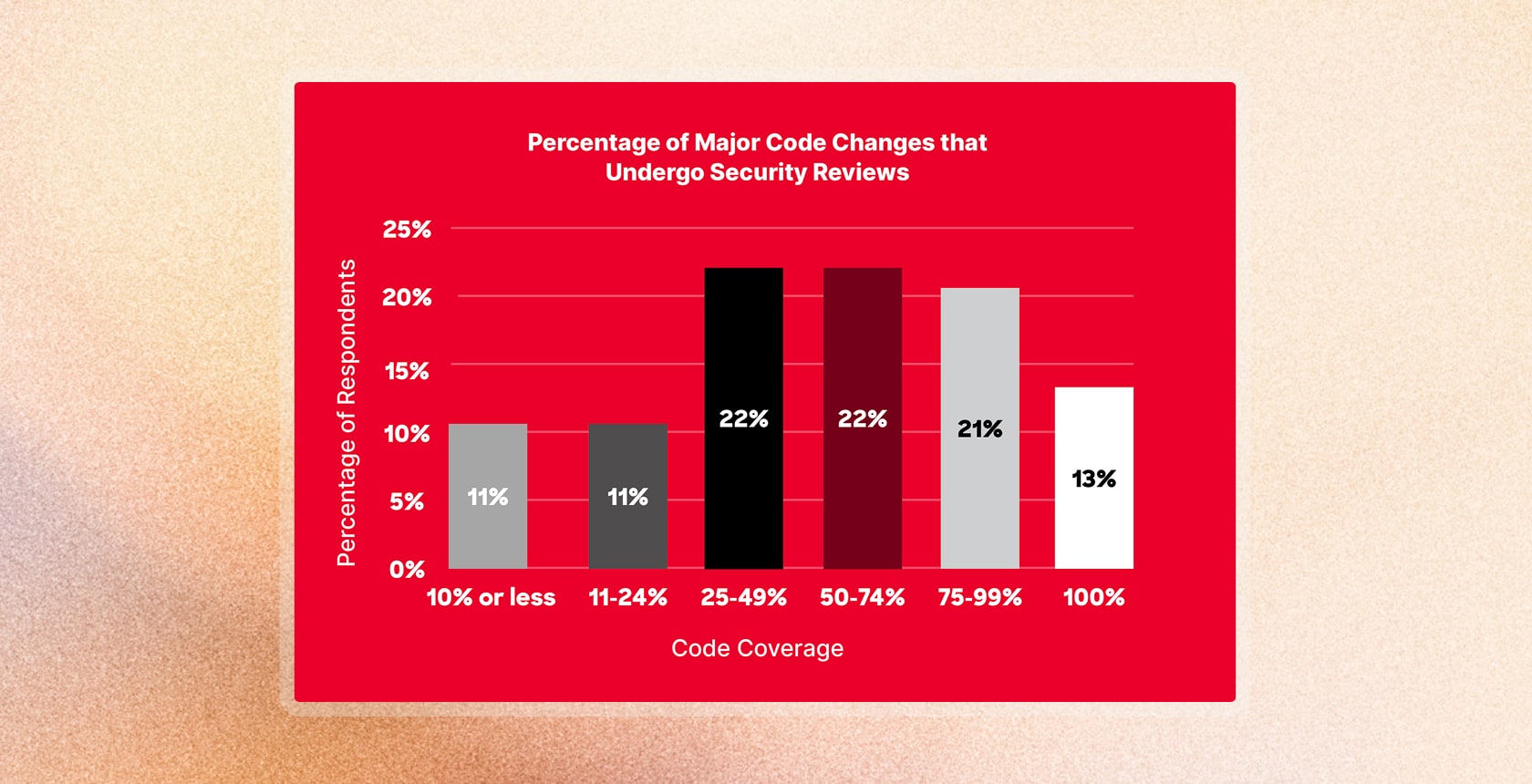

According to CrowdStrike’s 2024 State of Application Security Report, 54 percent of organizations admit that fewer than half of their code changes undergo any form of security review before release. Seventy percent say it takes 12 hours or longer to resolve critical vulnerabilities once discovered. That delay reflects how reactive most programs remain. Security still enters the process too late, and even when design reviews happen, they often give way to speed-to-market pressure and fragmented ownership.

Design security demands more than intention. It requires time to explore misuse cases, authority to challenge feature requirements, and a baseline of engineering maturity that allows teams to integrate security decisions without grinding development to a halt. The problem is not that teams disagree with the concept of secure by design, but that few have the margin, structure, or skills required to make it real at scale.

The secure by design challenge just became exponential, but there’s a silver lining

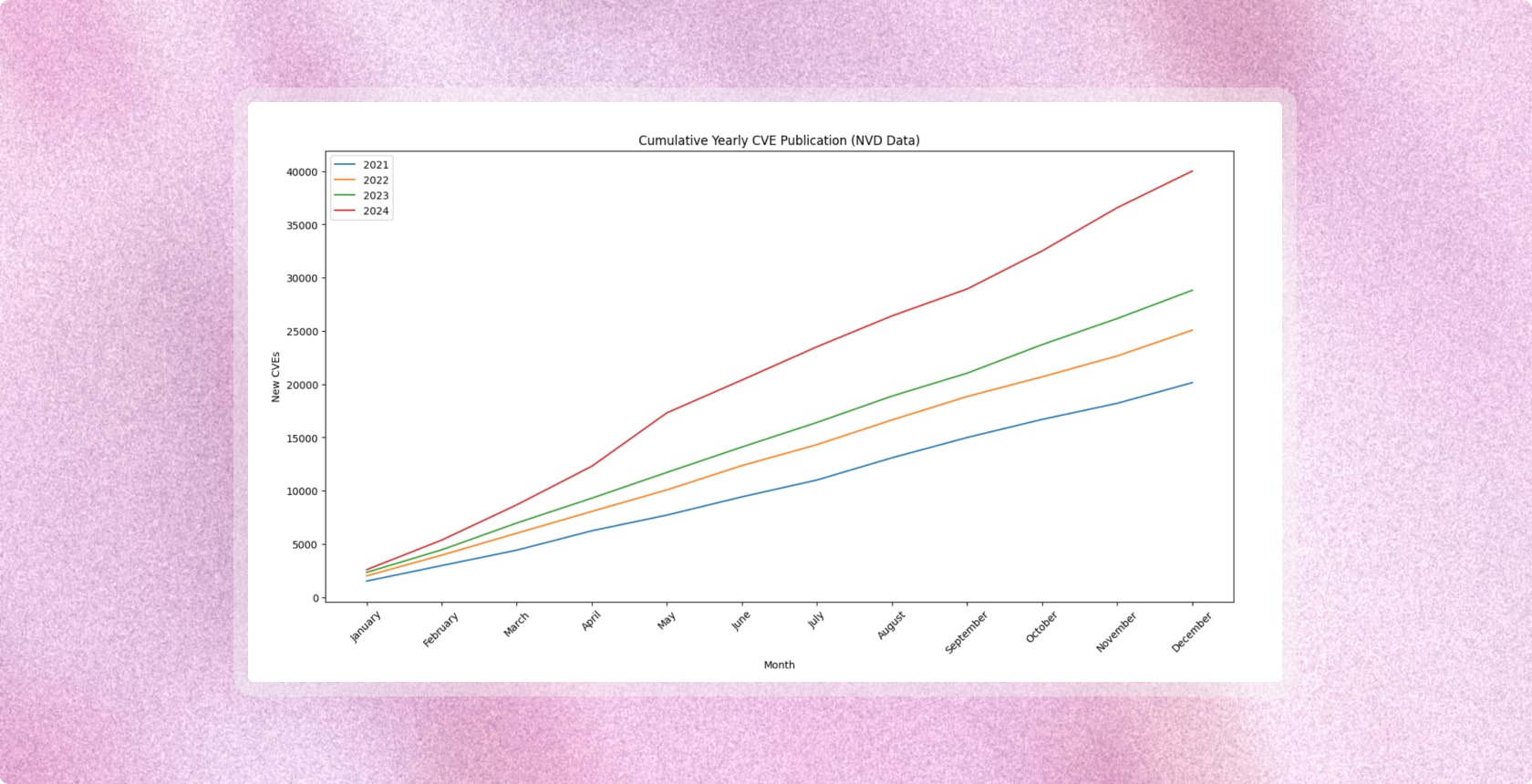

Unless you’ve been living under a rock, it should be no shock that the state of vulnerabilities is only getting worse. Disclosures keep rising year over year, and that is only what we’re aware of at the moment. Organizations already struggle to triage, prioritize, and patch at the pace issues appear, leaving a growing backlog.

This was a growing problem back when we were just talking in terms of good old-fashioned human developers and bad actors. Now AI-native development is accelerating velocity across the board, so the problem is compounding. The existing backlog remains, the pressure to patch and prioritize persists, and we are generating more code faster than ever. On top of that, bad actors are increasingly leveraging LLMs both to discover new attack vectors and as an attack vector in their own right.

There is a silver lining. The same AI that is propelling development can be applied to the bottlenecks above: it can close skills gaps with just-in-time design reviews; auto-draft and sanity-check threat models and architecture reviews; flag unsafe patterns and dependency risks before code merges; generate paved roads and secure default configs; triage the flood of potential risks and threats so humans focus on the highest-impact work; and automate design security workflows, both for human-in-the-loop security reviews and for direct-to-developer copilots that provide expert security guidance and safe code suggestions. Used this way, AI amplifies scarce expertise, turns design context into consistent security artifacts, and quantifies previously qualitative design risk across products.

What’s next for secure by design?

This article sets the foundation for how we think and talk about secure by design. We unpacked the confusion around the term, examined CISA’s framing, and argued that most of what is called secure by design today happens after design is finished.

In the next posts in this series, we’ll dive deeper into the secure by design progression we laid out earlier and explore each layer in depth to provide a roadmap for adoption and implementation, starting with design security.