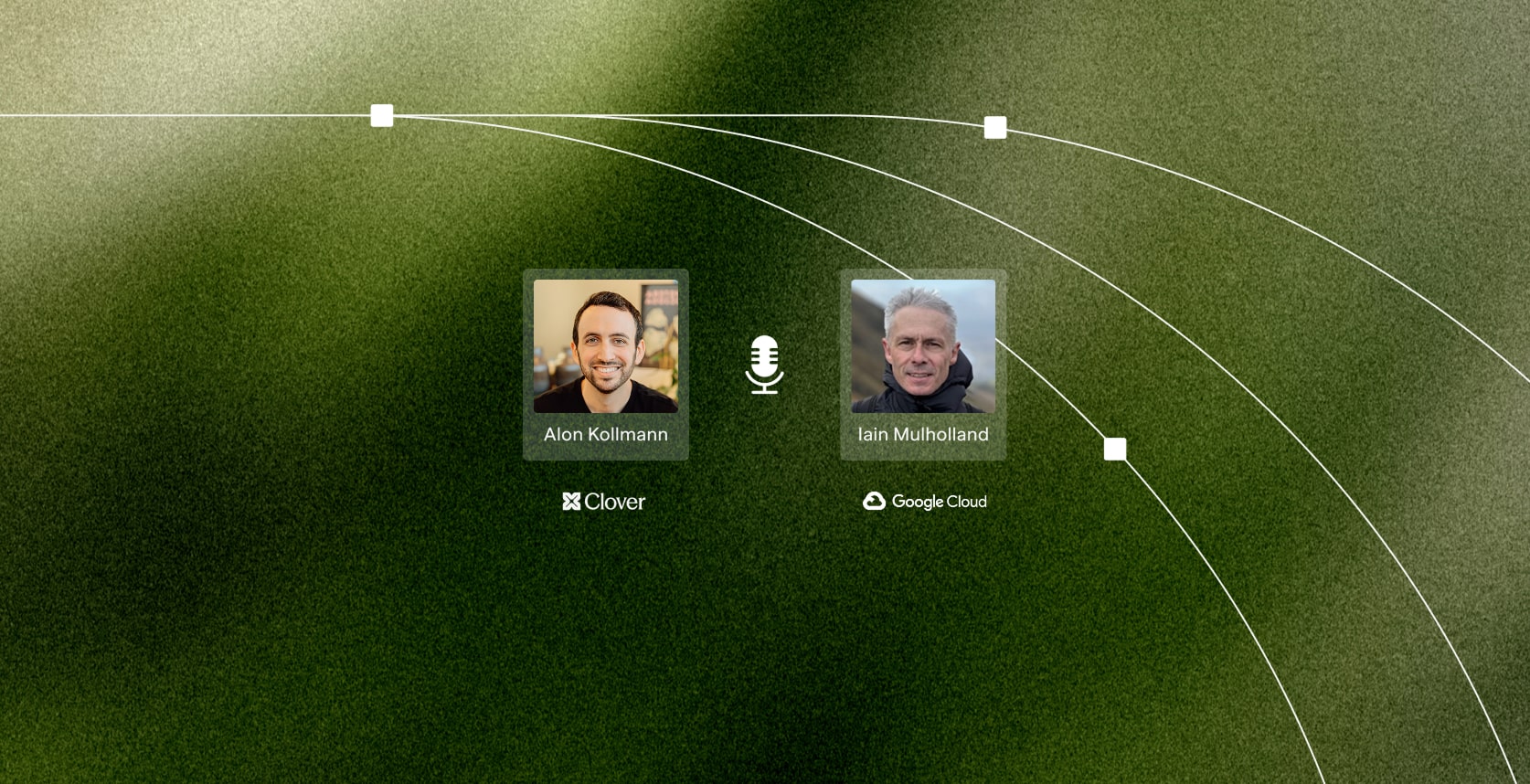

Note from the author

I recently got a chance to sit down with Iain Mulholland, Senior Director, Cloud CISO at Google Cloud, to explore what it really means to build secure by design in an era of AI-native development.

We had a wide-ranging conversation that covered everything from how AI is changing the developer workflow to how organizations can embed guardrails without slowing innovation. At its core, we wanted to understand how AI is making “secure by design” tangible rather than just a slogan or a compliance requirement. Below, Iain expands on several points from our discussion, all of which are important to understand at this pivotal moment for product security.

The AI impact on product security

I'm excited about what AI can do for product security. Google has been in this space for a long time, and yet we still face the same problem of scale: There are simply never enough security engineers. You could give me a thousand more security engineers, and I still wouldn't be able to cover everything I’d want to cover. But AI changes that, particularly in product security, because it can be present all the time and operate at scale.

Traditional tools are very good at identifying issues, but the model breaks down when a finding just sits in a queue waiting for a developer to fix it manually. The ability of AI not only to identify an issue but to actually fix it is a game changer, and it scales.

Another point is that AI can be there all day, every day, and, more critically, it can be built directly into automation workflows. Many processes today are asynchronous: They rely on a security engineer being available to assist in a consultative role, which really ends up being a Monday-to-Friday job. If you have an AI that’s integrated into your CI/CD pipelines, it's present all the time and becomes a built-in part of the continuous flow.

Product security vs application security

In traditional application security, legacy tools such as static application security testing (SAST) or software composition analysis (SCA) scan the products and create a backlog of issues to remediate, all outside the development process. Meanwhile, the teams developing products are going through the build process, doing beta releases, and working to get the product to market. Product security approaches this much more holistically.

Product security looks at the purpose of the product and sees it as a business problem to solve. It’s about being involved in the whole process of building a product, so it's much more integrated and operates in real time.

There is a transition that has to take place between the AppSec model and the product security approach. How do we move security engineering teams from being blockers to actually being business enablers? How do you actually understand where your product teams are? What are their business objectives? How do you fit into all that and ensure that you're adding momentum to the business, not taking it away? How do you move from being the team that says “No” to the team that is figuring out ways to say “Yes”?

AI is already being used significantly for code and product development, and that's pretty exciting. We’re nearing a world where we go from having “software engineers” to having “software designers.” It’s a world where a developer prompts an LLM to build an application that solves a certain problem, describing how the user interface should look and providing the data sources. That’s where design-led product security is important. It takes those inputs from the developer and applies the proper security policies and guardrails automatically. It finds secure ways to access the data source the developer wants to use, whether that’s a specific connection method, a type of encryption, or any other rule the product security team has codified.

How do we describe our security guardrails, our security principles, and our known vulnerabilities in language that AI can use and apply? That last part about known vulnerabilities is critical, because AI also lets us understand where our developers are making mistakes and where the failure patterns are, then catch those patterns, describe them, and ultimately correct them.
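One way to picture guardrails "described in language AI can use" is policy as data: each rule carries a plain-language description that could be handed to an AI assistant as context, alongside a deterministic check that a pipeline can enforce. The Python sketch below is a hypothetical illustration, not Google Cloud's or Clover's implementation; the `DataSourceRequest` fields, the rule IDs, and the three example policies are assumptions chosen to mirror the connection-method, encryption, and codified-rule examples in the text.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class DataSourceRequest:
    """Hypothetical description of a data source a developer asked for."""

    name: str
    scheme: str               # e.g. "postgresql+tls" or "http" (assumed format)
    encrypted_at_rest: bool
    credentials_inline: bool  # True if secrets were pasted into the config


@dataclass(frozen=True)
class Guardrail:
    """One codified rule: human/AI-readable text plus an enforceable check."""

    rule_id: str
    # Plain-language policy; the same text could be given to an LLM as context.
    description: str
    # Deterministic predicate: True means the request complies.
    check: Callable[[DataSourceRequest], bool]


# Hypothetical example policies, not an official rule set.
GUARDRAILS: List[Guardrail] = [
    Guardrail("TLS-001", "Connections to data sources must use TLS.",
              lambda r: r.scheme.endswith("+tls") or r.scheme == "https"),
    Guardrail("ENC-001", "Data sources must be encrypted at rest.",
              lambda r: r.encrypted_at_rest),
    Guardrail("SEC-001", "Credentials must come from a secret manager, not inline config.",
              lambda r: not r.credentials_inline),
]


def violations(request: DataSourceRequest) -> List[str]:
    """Return the IDs of every guardrail the request fails, in rule order."""
    return [g.rule_id for g in GUARDRAILS if not g.check(request)]


if __name__ == "__main__":
    request = DataSourceRequest(
        name="orders-db",
        scheme="http",
        encrypted_at_rest=True,
        credentials_inline=True,
    )
    for rule_id in violations(request):
        print(f"{request.name} violates {rule_id}")
```

For the request above, `violations` returns `["TLS-001", "SEC-001"]`. Keeping the description and the check side by side means the same rule text can guide what an AI generates and also gate the result, and a newly observed failure pattern becomes one more entry in the list.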

The well-lit paths of building secure by design

At Google Cloud, it's very important for us to include security at the design level. We recently signed the US government’s Secure by Design Pledge, but frankly, we've been doing security by design for a very long time. It's an expectation of our customers, and a very reasonable one. The question is simple: How do we create well-lit paths for our developers to do the right thing?

We approach this in a number of ways. One is directing our partners and developers toward the well-lit paths: Use this library, use this framework, use this vendor, and you're going to get all the security you need for free. You don't need to devote more time to thinking about it. It removes friction and makes things much easier.

The second is making sure our commitment to security by design extends to customers. Sometimes the customer needs to make a choice. Large enterprises have their own needs, and it may not be clear to them what they need to do to become secure by design. That’s why we make deliberate choices about default settings. Should we apply the more secure default and then allow customers to disable that setting if their use case requires it, for example to integrate with a legacy application? That’s also why we work very closely with our development teams to ship products in a more secure state, while making customers aware that, if necessary, they can back that off.
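The secure-by-default choice described above can be sketched as settings that ship in the more secure state, where relaxing one is an explicit, recorded action. This is a minimal Python sketch under assumed names: `ServiceSettings`, its two settings, and `relax()` are hypothetical and do not correspond to any Google Cloud product API.

```python
from dataclasses import dataclass, field
from typing import Dict

# Hypothetical secure defaults that a customer is allowed to relax.
RELAXABLE_SETTINGS = ("require_tls", "block_public_access")


@dataclass
class ServiceSettings:
    """Hypothetical product settings that ship in the more secure state."""

    require_tls: bool = True
    block_public_access: bool = True
    # Every relaxed setting with the customer's stated reason, so backing
    # off a secure default is an explicit, auditable decision.
    exceptions: Dict[str, str] = field(default_factory=dict)

    def relax(self, setting: str, reason: str) -> None:
        """Disable one secure default; a non-empty justification is required."""
        if setting not in RELAXABLE_SETTINGS:
            raise ValueError(f"unknown setting: {setting}")
        if not reason.strip():
            raise ValueError("a justification is required to relax a secure default")
        setattr(self, setting, False)
        self.exceptions[setting] = reason


if __name__ == "__main__":
    settings = ServiceSettings()  # secure unless the customer opts out
    settings.relax("require_tls", "legacy client cannot negotiate TLS")
    print(settings)
```

After that call, `require_tls` is `False`, `block_public_access` stays `True`, and the reason is stored in `exceptions`. Requiring a justification is one way to keep the customer aware that they are choosing a less secure configuration rather than drifting into one.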

These are examples of very conscious choices and well-lit paths. It’s really more a question of how far we can go with this approach.

How Clover is changing product security

As a product security leader, my challenge has always been how to scale and how to get deeper into the product space, even all the way to where our customers are. A major obstacle is that there are never enough security engineers. Taking an AI-driven approach is an exciting opportunity in this regard, because AI provides scale and increases productivity. And Clover helps do that.